What if the AI systems you rely on daily could be easily manipulated to act against your interests?

AI Systems Vulnerable to Manipulation

LLMs have many security flaws. Attack techniques against them are evolving. So, it's crucial to protect them well. This prevents potential exploits and keeps your data safe.

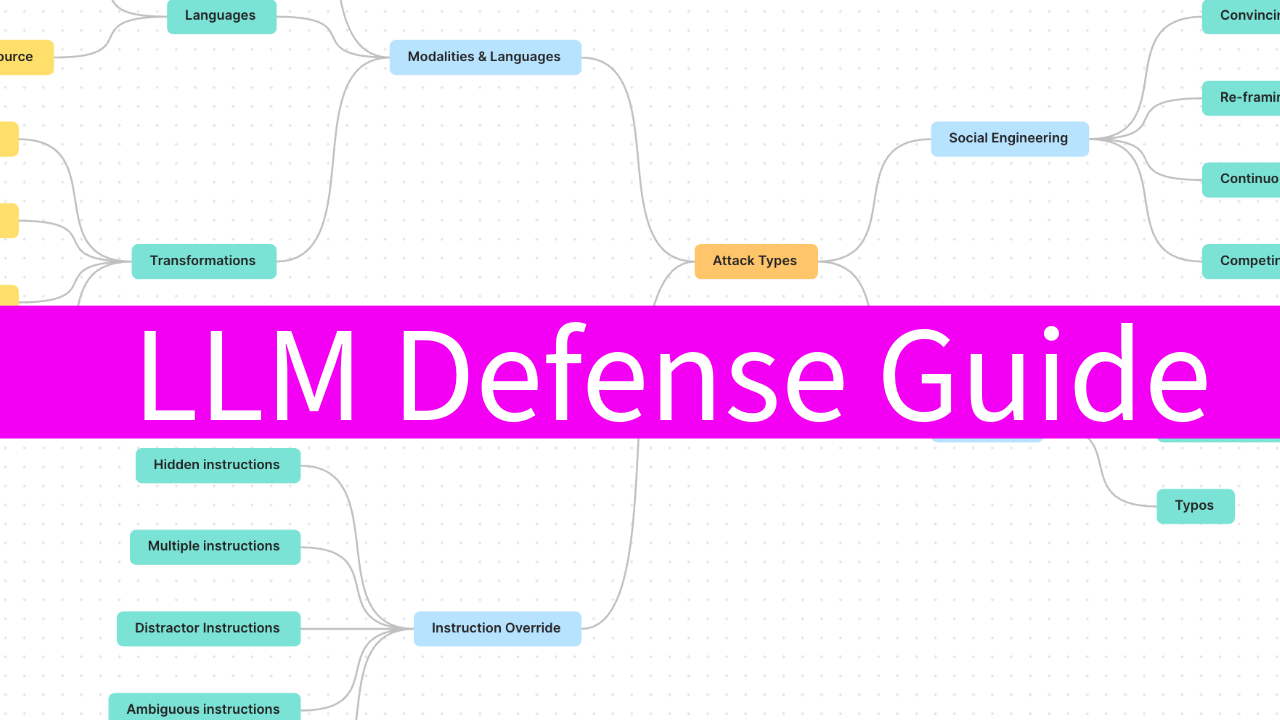

Attacks on large language models (LLMs) have different levels. They are based on their complexity and potential impact.


Here's an overview of these attacks, with easy-to-follow examples: